
Building a neural network with the AlexNet architecture

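    import numpy as np
    import tensorflow as tf

    # Random stand-in data shaped like AlexNet inputs (227x227 RGB images, 1000 classes).
    # dtype=np.uint8 stores each pixel in 1 byte; the default integer dtype is usually 8 bytes.
    X = np.random.randint(0, 256, (5000, 227, 227, 3), dtype=np.uint8)   # training images
    YT = np.random.randint(0, 1000, (5000,))                             # training labels
    x = np.random.randint(0, 256, (1000, 227, 227, 3), dtype=np.uint8)   # test images
    yt = np.random.randint(0, 1000, (1000,))                             # test labels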
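An array of shape (5000, 227, 227, 3) is large, so the size of each element matters. The following is a rough back-of-the-envelope sketch in plain Python. The helper name `array_bytes` is made up for illustration, and the 8-byte figure assumes NumPy's default integer is 64-bit, which depends on the platform and NumPy version.

```python
from math import prod

def array_bytes(shape, bytes_per_element):
    """Return the memory needed for a dense array of the given shape."""
    return prod(shape) * bytes_per_element

train_shape = (5000, 227, 227, 3)
n_elements = prod(train_shape)
int64_gib = array_bytes(train_shape, 8) / 2**30   # assumes a 64-bit default integer
uint8_gib = array_bytes(train_shape, 1) / 2**30   # 1 byte per pixel covers 0..255

print(f"elements: {n_elements:,}")
print(f"as int64: {int64_gib:.2f} GiB")
print(f"as uint8: {uint8_gib:.2f} GiB")
```

Under these assumptions the training images alone need several GiB as 64-bit integers but well under 1 GiB as `uint8`.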

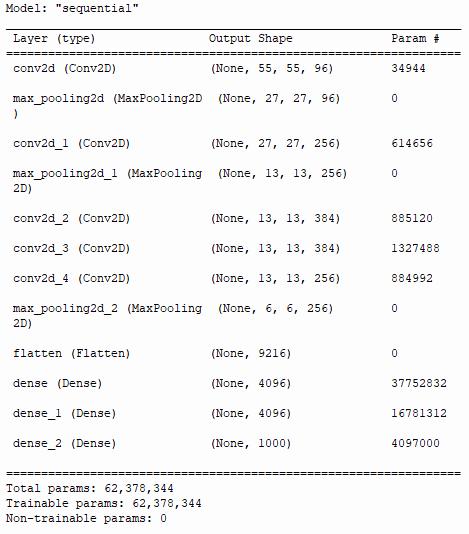

    model = tf.keras.Sequential([
        tf.keras.layers.InputLayer(input_shape=(227, 227, 3)),
        # Conv2D(filters, kernel_size, strides); padding defaults to 'valid'
        tf.keras.layers.Conv2D(96, (11, 11), (4, 4), activation='relu'),
        # MaxPooling2D(pool_size, strides)
        tf.keras.layers.MaxPooling2D((3, 3), (2, 2)),
        tf.keras.layers.Conv2D(256, (5, 5), (1, 1), activation='relu', padding='same'),
        tf.keras.layers.MaxPooling2D((3, 3), (2, 2)),
        tf.keras.layers.Conv2D(384, (3, 3), (1, 1), activation='relu', padding='same'),
        tf.keras.layers.Conv2D(384, (3, 3), (1, 1), activation='relu', padding='same'),
        tf.keras.layers.Conv2D(256, (3, 3), (1, 1), activation='relu', padding='same'),
        tf.keras.layers.MaxPooling2D((3, 3), (2, 2)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(4096, activation='relu'),
        tf.keras.layers.Dense(4096, activation='relu'),
        tf.keras.layers.Dense(1000, activation='softmax')   # one output per class
    ])
    # model.summary()
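To check the layer sizes without building the model, the spatial dimensions can be traced by hand. This is a minimal sketch in plain Python that assumes the usual output-size rules for 'valid' and 'same' padding; the helper `conv_out` and the `layers` table are illustrative names, not part of Keras.

```python
def conv_out(size, kernel, stride, padding):
    """Output size along one axis for a Conv2D/MaxPooling2D-style window."""
    if padding == "same":
        return -(-size // stride)          # ceil(size / stride)
    return (size - kernel) // stride + 1   # 'valid': no padding

# (name, kernel, stride, padding, output channels or None to keep them)
layers = [
    ("conv1", 11, 4, "valid", 96),
    ("pool1", 3, 2, "valid", None),
    ("conv2", 5, 1, "same", 256),
    ("pool2", 3, 2, "valid", None),
    ("conv3", 3, 1, "same", 384),
    ("conv4", 3, 1, "same", 384),
    ("conv5", 3, 1, "same", 256),
    ("pool3", 3, 2, "valid", None),
]

size, channels = 227, 3
shapes = []
for name, kernel, stride, padding, out_channels in layers:
    size = conv_out(size, kernel, stride, padding)
    channels = out_channels or channels
    shapes.append((name, size, size, channels))
    print(f"{name}: {size} x {size} x {channels}")

flat = size * size * channels   # number of inputs to the first Dense layer
print("flatten:", flat)
```

The trace goes 227 -> 55 -> 27 -> 13 -> 6, so the first Dense layer sees 6 x 6 x 256 = 9216 inputs.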

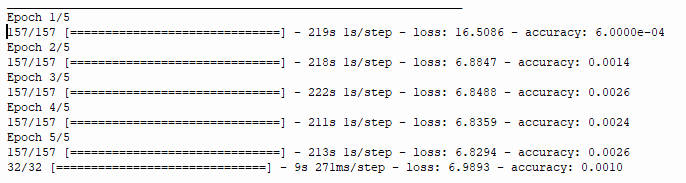

    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',   # labels are integer class ids
                  metrics=['accuracy'])
    model.fit(X, YT, epochs=5)
    model.evaluate(x, yt)
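Because the images and labels here are random, there is no pattern to learn, so the evaluation result should stay close to chance. As a sanity check, this small sketch computes what "chance" means for 1000 classes, assuming a model that predicts every class with equal probability. Training accuracy can still creep above this if the network memorizes the training set.

```python
import math

num_classes = 1000
chance_accuracy = 1 / num_classes
# Cross-entropy of a uniform prediction: -log(1 / num_classes)
chance_loss = -math.log(1 / num_classes)

print(f"chance accuracy: {chance_accuracy:.3%}")
print(f"chance loss: {chance_loss:.4f}")
```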

Adding batch normalization and dropout to the network

BatchNormalization (batch normalization) is placed before the activation function, for example:

    tf.keras.layers.BatchNormalization(),
    tf.keras.layers.ReLU(),
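To make the ordering concrete, here is a simplified sketch of what "normalize, then activate" does to one batch of values. It is plain Python rather than Keras and covers only the training-time computation; the real layer learns `gamma` and `beta` per channel and uses moving averages at inference time. The function names and input values are made up for illustration.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-3):
    """Normalize a batch of scalars to zero mean and unit variance, then scale and shift."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

def relu(values):
    return [max(0.0, v) for v in values]

pre_activations = [12.0, 15.0, 9.0, 18.0, 6.0]   # made-up layer outputs, all positive
normalized = batch_norm(pre_activations)         # centered around 0
activated = relu(normalized)                     # below-average values are clipped to 0

print([round(v, 3) for v in normalized])
print([round(v, 3) for v in activated])
```

Applied directly, ReLU would pass all five positive inputs through unchanged. After normalization the values are centered on zero, so ReLU clips those below the batch mean.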

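    import tensorflow as tf

    # CIFAR-10: 32x32 RGB images in 10 classes
    cifar10 = tf.keras.datasets.cifar10
    (X, YT), (x, yt) = cifar10.load_data()
    # Scale pixel values from [0, 255] to [0, 1]
    X, x = X / 255, x / 255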

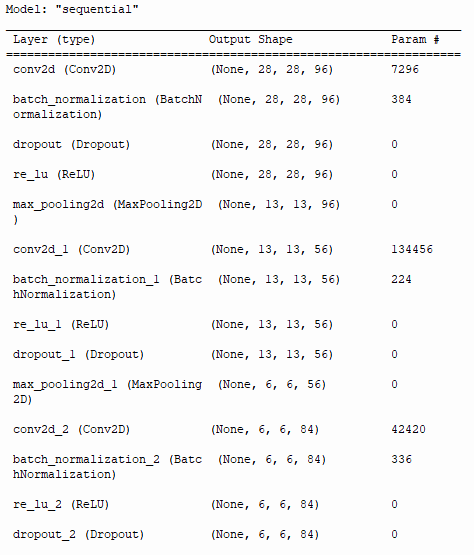

model = tf.keras.Sequential([

tf.keras.layers.InputLayer(input_shape=(32,32,3)),

tf.keras.layers.Conv2D(96,(5,5),(1,1)),

tf.keras.layers.BatchNormalization(), # 배치 정규화 : 데이터의 분포가 흐트러질때 재조정해주는 기능

tf.keras.layers.Dropout(0.2), # 레이어당 랜덤하게 학습하여 학습 데이터의 정확도를 올리는 것

tf.keras.layers.ReLU(),

tf.keras.layers.MaxPooling2D((3,3),(2,2)),

tf.keras.layers.Conv2D(56,(5,5),(1,1),activation='relu',padding='same'),

tf.keras.layers.BatchNormalization(),

tf.keras.layers.ReLU(),

tf.keras.layers.Dropout(0.2),

tf.keras.layers.MaxPooling2D((3,3),(2,2)),

tf.keras.layers.Conv2D(84,(3,3),(1,1),activation='relu',padding='same'),

tf.keras.layers.BatchNormalization(),

tf.keras.layers.ReLU(),

tf.keras.layers.Dropout(0.2),

tf.keras.layers.Conv2D(84,(3,3),(1,1),activation='relu',padding='same'),

tf.keras.layers.BatchNormalization(),

tf.keras.layers.ReLU(),

tf.keras.layers.Dropout(0.2),

tf.keras.layers.Conv2D(56,(3,3),(1,1),activation='relu',padding='same'),

tf.keras.layers.BatchNormalization(),

tf.keras.layers.ReLU(),

tf.keras.layers.Dropout(0.2),

tf.keras.layers.MaxPooling2D((3,3),(2,2)),

tf.keras.layers.Flatten(),

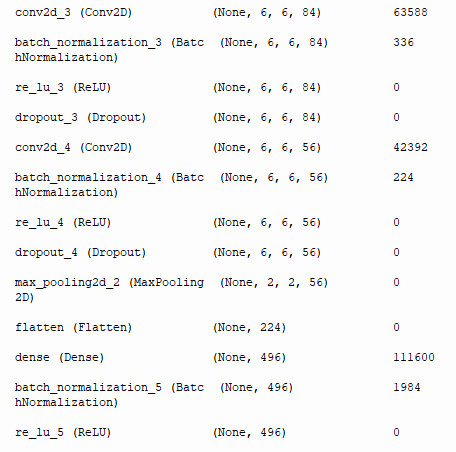

tf.keras.layers.Dense(496),

tf.keras.layers.BatchNormalization(),

tf.keras.layers.ReLU(),

tf.keras.layers.Dense(96),

tf.keras.layers.BatchNormalization(),

tf.keras.layers.ReLU(),

tf.keras.layers.Dense(10,activation='softmax')

])

model.summary()
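The parameter counts reported by model.summary() can be reproduced by hand, which is a useful check that the layers are wired as intended. This sketch assumes the standard counting rules: weights plus biases for Conv2D and Dense, and four values per channel for BatchNormalization, two of which are not trained. The helper names are illustrative.

```python
def conv_params(kernel, in_ch, out_ch):
    return kernel * kernel * in_ch * out_ch + out_ch   # weights + biases

def dense_params(in_units, out_units):
    return in_units * out_units + out_units            # weights + biases

def bn_params(channels):
    # gamma and beta (trainable) + moving mean and moving variance (non-trainable)
    return 4 * channels

channels = 3
total = non_trainable = 0
for kernel, filters in [(5, 96), (5, 56), (3, 84), (3, 84), (3, 56)]:
    total += conv_params(kernel, channels, filters) + bn_params(filters)
    non_trainable += 2 * filters
    channels = filters

# Flatten: the spatial size goes 32 -> 28 -> 13 -> 6 -> 2, leaving 2 x 2 x 56 values
units = 2 * 2 * channels
for width in (496, 96):
    total += dense_params(units, width) + bn_params(width)
    non_trainable += 2 * width
    units = width
total += dense_params(units, 10)   # softmax output layer, no batch normalization

print("total parameters:", total)
print("non-trainable parameters:", non_trainable)
```

Dropout, ReLU, and pooling layers add no parameters, so they do not appear in the count.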

    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',   # labels are integer class ids
                  metrics=['accuracy'])

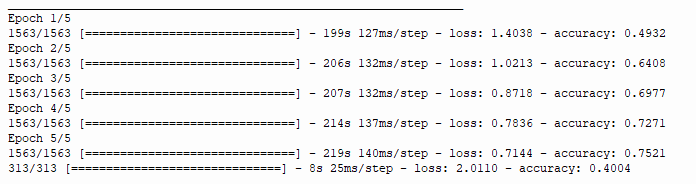

    model.fit(X, YT, epochs=5)
    model.evaluate(x, yt)
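One detail worth keeping in mind when comparing the fit and evaluate numbers: dropout is only active during training. The sketch below imitates the usual "inverted dropout" behaviour in plain Python, with a rate of 0.2 as in the model above. The function and variable names are made up, and this is an illustration rather than the Keras implementation.

```python
import random

def dropout(values, rate, training, rng):
    """Inverted dropout: zero each value with probability `rate`, rescale the survivors."""
    if not training:
        return list(values)            # inference: values pass through unchanged
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]

rng = random.Random(0)                 # fixed seed so the run is repeatable
activations = [1.0] * 10_000
train_out = dropout(activations, rate=0.2, training=True, rng=rng)
eval_out = dropout(activations, rate=0.2, training=False, rng=rng)

dropped_fraction = train_out.count(0.0) / len(train_out)
train_mean = sum(train_out) / len(train_out)

print(f"dropped during training: {dropped_fraction:.1%}")
print(f"mean activation during training: {train_mean:.3f}")
print("unchanged at inference:", eval_out == activations)
```

Roughly 20% of the values are zeroed while training, and the survivors are scaled by 1 / 0.8 so the average activation stays about the same. At evaluation time nothing is dropped.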
